Pushing prompts into production without optimizing them for efficiency and output control is an unfortunately common, wasteful practice that inflates token budgets and stalls progress.

The Gallahue Method for fixing these problems is simple yet effective: it removes wasted tokens and improves overall output.

Scenario:

Let’s imagine I’m creating a website and I want to list the 10 best restaurants in every major city by cuisine type. Lacking the time and budget to visit and catalog these places personally, I write two prompts that search the web and generate JSON output I can quickly loop through using n8n or another tool and then patch into a CMS.

Prompt 1:

For New York City, I need a bullet point list of the 10 best restaurants overall and the 10 best restaurants for the following cuisine types: American, Chinese, Sushi, Pizza, Steakhouses. Output as a JSON with entry for each restaurant under corresponding list.

Prompt 2:

For Eleven Madison Park, I need the following details: Year opened, cuisine type, average price point, star rating, executive chef, acclaimed dishes and a 150 word summary detailing why the restaurant is seen as exemplary. Output as a JSON with items for each point.

(Note: For both examples I used a single prompt, but in practice the setup would be a system prompt plus a user prompt in which the city or restaurant value is specified, with web search enabled.)
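Here is a minimal Python sketch of that system/user split, assuming an OpenAI-style chat format in which each message is a dict with `role` and `content` keys. `SYSTEM_PROMPT` and `build_messages` are hypothetical names; the actual API call and the web-search setting depend on your provider and are left out.

```python
# Hypothetical system prompt: fixed instructions shared by every run.
SYSTEM_PROMPT = (
    "You compile restaurant lists. Output only JSON that matches the "
    "schema provided. Do not include additional categories."
)


def build_messages(city: str) -> list[dict[str, str]]:
    """Pair the fixed system prompt with a per-run user prompt.

    Only the city changes between runs, so a loop (n8n or otherwise)
    just needs to supply a new value here.
    """
    user_prompt = f"City: {city}"
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": user_prompt},
    ]


for city in ["New York City", "Chicago"]:
    messages = build_messages(city)
    print(messages[1]["content"])  # the only part that varies per run
```

Keeping the instructions in the system prompt means they are written once, while the loop only varies the short user prompt.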

Leveraging the Gallahue Method to Improve Prompt Output

On the surface, both prompts seem reasonable: the average person can read them and understand what’s being asked and what output is expected.

However, both have multiple flaws that will lead to degraded, poorly articulated output, so let’s refine them with my method:

The Gallahue Method (DGRC) for Prompt Optimization:

- Define – Strictly define inputs and outputs

- Guide – Provide LLMs with easy paths to resolution

- Review – Check prompt for ambiguity until cleared

- Compress – Refine optimized prompt into simplest form for token efficiency

Defining Inputs and Outputs

Both prompts suffer from a lack of defined inputs and outputs. Specificity is critical to getting output that is valuable rather than a waste of tokens.

The Silence of the Lambs offers an odd but effective way to think about managing definitions. In a pivotal scene, Hannibal Lecter summarizes Marcus Aurelius by saying “Ask of each thing: What is its nature?” That’s how you should approach writing production-ready prompts.

Is each word, ask, input, and output requirement defined clearly enough that if I ran this prompt 100 times on different accounts with no personalization, I would get the same answer format every time? If not, how do I change it so the output normalizes?
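One way to put the 100-runs test into practice is to collect the responses from repeated runs and check whether they all share one structure. The sketch below uses hypothetical helper names and compares only top-level JSON keys, so a stricter version would also walk the nested entries; it flags any run that fails to parse or drifts in shape.

```python
import json


def format_signature(raw: str) -> tuple[str, ...]:
    """Return the sorted top-level keys of a JSON object response."""
    data = json.loads(raw)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object at the top level")
    return tuple(sorted(data))


def format_is_stable(responses: list[str]) -> bool:
    """True if every response parses and all share one top-level key set."""
    try:
        signatures = {format_signature(r) for r in responses}
    except ValueError:  # json.JSONDecodeError is a subclass of ValueError
        return False
    return len(signatures) == 1


runs = [
    '{"overall": [], "american": []}',
    '{"american": [], "overall": []}',
    '{"overall": [], "thai": []}',  # drifted: an extra, unrequested category
]
print(format_is_stable(runs[:2]))  # key order does not matter
print(format_is_stable(runs))
```

A run that prepends “Here is your JSON:” fails to parse, which this check also counts as drift.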

Let’s look at how the define exercise works in practice by highlighting (in capitals) the ambiguous or poorly defined terms:

Prompt 1:

For New York City, I need a BULLET POINT LIST of the 10 BEST restaurants OVERALL and the 10 BEST restaurants for THE FOLLOWING cuisine types: American, Chinese, Sushi, Pizza, Steakhouses. Output as a JSON with ENTRY FOR EACH RESTAURANT under corresponding list.

Bullet point list: While this is a tangible output structure, what specifically goes in it? Is it the restaurant name? The name and address? Do you want an ordered ranking and a summary of each restaurant? If so, what are the ranking criteria?

In this instance, the LLM must make a best guess at what you want, and depending on model and mode, you’ll get either a compact list or a 2,000-word dissertation on fine-dining spots.

Best/Overall: Poor word choice is a mistake that even the most skilled prompt engineers make. Ambiguous but well-meaning terms like best or overall are open to interpretation, and in my experience LLMs will infer that you’re looking for a composite of brands with a high level of exposure and high review counts and ratings on Yelp/G2/Trustpilot/Capterra, which may not align with your thinking.

New York City in particular has no shortage of highly rated, heavily reviewed restaurants; you could make individual lists for Midtown, Brooklyn, Chelsea, etc., and see no drop in the quality of inclusions.

In this example, you might replace best or overall with a requirement such as a rating of 4.6 or higher on one or more specified sites and at least 3 years in operation.
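Once best is replaced by thresholds, the model’s output becomes checkable. A minimal sketch, assuming each returned restaurant carries a rating and an opening year; the `Restaurant` fields are hypothetical, and years open is approximated by the calendar-year difference.

```python
from dataclasses import dataclass
from datetime import date

# Thresholds taken from the criteria above.
MIN_RATING = 4.6
MIN_YEARS_OPEN = 3


@dataclass
class Restaurant:
    name: str
    rating: float      # rating on the specified review site(s)
    opened_year: int


def meets_criteria(r: Restaurant, today: date) -> bool:
    """Replace the vague 'best' with two checkable thresholds."""
    years_open = today.year - r.opened_year  # calendar-year approximation
    return r.rating >= MIN_RATING and years_open >= MIN_YEARS_OPEN


# Hypothetical candidates returned by the model.
candidates = [
    Restaurant("Example Bistro", 4.7, 2015),
    Restaurant("New Spot", 4.9, 2024),
]
today = date.today()
print([r.name for r in candidates if meets_criteria(r, today)])
```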

The Following: This is an absolutely critical point. When you present LLMs with a list and do not gate the output, you will likely get additional content that does not conform to what you want.

LLMs are generally tuned to be accommodating, so they will often assume you want additional output unless instructed otherwise. Here, because you’re building a recommended-restaurant list, the LLM completing the prompt could naturally assume you want every cuisine type covered and add Indian, Thai or vegan categories to the output.

That’s why you should always gate your output, using phrases like “Do not include additional categories” or “Only generate for the items in this list.”
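Prompt wording is the first gate; a code-side check is a cheap backstop for anything that slips through. A sketch, assuming the model returns one top-level key per category, with hypothetical lowercase key names:

```python
# Categories actually requested in Prompt 1 (key names are hypothetical).
REQUESTED = {"overall", "american", "chinese", "sushi", "pizza", "steakhouses"}


def ungated_categories(output: dict) -> set[str]:
    """Return categories the model added beyond the requested list."""
    return {key.lower() for key in output} - REQUESTED


def missing_categories(output: dict) -> set[str]:
    """Return requested categories the model left out."""
    return REQUESTED - {key.lower() for key in output}


response = {"Overall": [], "American": [], "Thai": []}
print(ungated_categories(response))  # the unrequested extra
print(sorted(missing_categories(response)))
```

Checking both directions catches an extra category and a dropped one, either of which would break a loop that expects a fixed structure.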

JSON Entry for Each Restaurant: The issue here is the same one we identified with the bullet point list: we ask for output but don’t define exactly what we want, leaving the LLM to infer it from the pattern.

Furthermore, we haven’t specified that we want only the JSON, so depending on the LLM and settings, we may get summary text, introductory text or status confirmations that do not conform to our output requirements.
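Defining the entry fields and requiring JSON only also makes the response easy to check by machine. In this sketch, `ENTRY_FIELDS` is a hypothetical definition of each restaurant entry. Python’s `json.loads` rejects any leading or trailing prose, so an intro line or closing note raises an error instead of flowing into the CMS.

```python
import json

# Hypothetical definition of one restaurant entry.
ENTRY_FIELDS = {"name", "address", "rating"}


def parse_json_only(raw: str) -> dict:
    """Parse a response that must contain only JSON.

    json.loads raises json.JSONDecodeError (a ValueError) on intro text,
    closing notes, or Markdown code fences around the object.
    """
    data = json.loads(raw)
    if not isinstance(data, dict):
        raise ValueError("expected one JSON object keyed by category")
    for category, entries in data.items():
        if not isinstance(entries, list):
            raise ValueError(f"{category}: expected a list of entries")
        for entry in entries:
            if not isinstance(entry, dict) or set(entry) != ENTRY_FIELDS:
                raise ValueError(f"{category}: entry does not match ENTRY_FIELDS")
    return data


good = '{"overall": [{"name": "X", "address": "1 Main St", "rating": 4.7}]}'
print(parse_json_only(good)["overall"][0]["name"])
```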

Let’s take our definition exercise to the next prompt:

For ELEVEN MADISON PARK, I need the following details: Year opened, CUISINE TYPE, AVERAGE PRICE POINT, STAR RATING, EXECUTIVE CHEF, ACCLAIMED DISHES and a 150 WORD SUMMARY detailing why the restaurant is seen as EXEMPLARY. OUTPUT AS A JSON with items for each point.

I know 95% of the prompt is highlighted, but it will make sense as we go through it term by term:

Eleven Madison Park: The restaurant has a unique name, but if this were to run for a common restaurant name like Great Wall, Taste of India or Hibachi Express (all of which have 30+ unrelated restaurants in the United States), you would quickly introduce a best-guess situation where models have to infer which location is correct. In this example, including an address in the prompt would remove any chance of location inaccuracy.
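A minimal sketch of passing the address along with the name in the user prompt. `Location` and `detail_prompt` are hypothetical, and the address in the usage example is a placeholder, not a real restaurant.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Location:
    name: str
    street: str
    city: str
    state: str


def detail_prompt(loc: Location) -> str:
    """Identify the restaurant by name *and* address so the model never
    has to guess which of several same-named restaurants is meant."""
    return (
        f"Restaurant: {loc.name}, {loc.street}, {loc.city}, {loc.state}. "
        "Use only this location."
    )


# Placeholder address for illustration only.
print(detail_prompt(Location("Great Wall", "123 Example St", "Springfield", "IL")))
```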

Cuisine Type: In this instance we set cuisine categories in the previous prompt, but we are not asking the subsequent prompt to conform to them, so the cuisine type it returns may not match any of our categories.

Average Price Point: The ambiguity here is that we don’t say whether we want the average cost of a meal (and if so, whether to output a range or a specific dollar amount) or a tiered metric like $, $$, $$$ (aligned, of course, to cost tiers we would set) of the kind commonly seen in restaurant guides.
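If you choose a tiered metric, one approach is to define the tiers once and use that single definition both to write the instruction into the prompt and to check the returned label. The dollar cutoffs below are placeholders, not recommendations.

```python
# Placeholder cutoffs: upper bound of average cost per person (USD) per tier.
TIERS = [(30, "$"), (75, "$$"), (150, "$$$")]
TOP_TIER = "$$$$"


def price_tier(avg_cost_per_person: float) -> str:
    """Map an average per-person cost to a tier label."""
    for upper_bound, label in TIERS:
        if avg_cost_per_person <= upper_bound:
            return label
    return TOP_TIER


def tier_instructions() -> str:
    """Render the same tier definitions as a line for the prompt."""
    parts = [f"{label} = up to ${upper}" for upper, label in TIERS]
    parts.append(f"{TOP_TIER} = over ${TIERS[-1][0]}")
    return "Average price per person: " + "; ".join(parts) + "."


print(tier_instructions())
print(price_tier(42.0))
```

Because the prompt text and the check come from one table, changing a cutoff cannot leave them out of sync.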

Star Rating: First, with restaurants we could be referencing Michelin stars or a traditional 1-5 star value. Second, if we mean the traditional star value, we need to explain where to pull it from and whether it’s a single source or a composite.

Executive Chef: Restaurants commonly post this on their website, press and social materials, but if they do not, or they have recently parted ways with that chef, we need to give a resolution path so the LLM doesn’t waste tokens going down a rabbit hole trying to find an answer. For something like this, I’ll commonly add a line like: “If a value cannot be determined then output NA”
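The same NA rule can also be enforced after the fact, so downstream steps always receive every field. A sketch with hypothetical field names for the Prompt 2 output. Note that it also drops any field that was not requested.

```python
# Hypothetical field names for the Prompt 2 output.
FIELDS = [
    "year_opened", "cuisine_type", "price_tier", "star_rating",
    "executive_chef", "acclaimed_dishes", "summary",
]


def normalize(record: dict) -> dict:
    """Fill missing or empty fields with "NA" (mirroring the prompt rule)
    and keep only the requested fields, in a fixed order."""
    out = {}
    for field in FIELDS:
        value = record.get(field)
        out[field] = "NA" if value in (None, "", []) else value
    return out


print(normalize({"year_opened": 2001, "executive_chef": "", "notes": "extra"}))
```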

Acclaimed Dishes: In the previous section, we talked about terms like best/overall and what LLMs might infer from them. Acclaimed is another term that seems defined but is still open to wide interpretation. Are you looking for dishes specifically referenced in high-value source reviews, or the most commonly mentioned dishes across a set of reviews? Again, this is a point to define.

150 Word Summary: The request asks for a 150 word summary of why the restaurant is exemplary. We need to define exemplary, and to do that we should give LLMs example points to highlight: Does the restaurant have a Michelin star? Has it been voted a top 100/50/10 restaurant in the world or its local market by the following sources ( ) …

Output as a JSON: Same feedback as above: make sure that only the JSON is output, with no intro text, summaries, notes, etc.

Guiding LLMs

The second component of improving the efficiency and quality of LLM output is to guide LLMs to key sources they can rely on for conclusions.

When it comes to restaurant reviews and best-of lists, especially for major markets, there’s no shortage of sources, from Michelin Guides to YouTube hot takes, dish reviews and tier rankings shot in someone’s car.

That being said, when you ask an LLM to put together a list like this, it might default to sources that aren’t the most valuable. For instance, LLMs tuned to favor certain sources may prioritize Reddit over Resy, Eater or The New York Times. In that case you would get snippets from anonymous accounts instead of long-form detail from named authors with a body of published work.

Unfortunately, most people don’t guide their prompts with preferred sources, which leads to issues like the one above, as well as wasted reasoning tokens as LLMs work out which sources would be best for a request and then search those sources for relevant content.

How to “Guide”

Guiding simply means appending the sources you believe are best aligned with the output you want to achieve.

If I want to generate content about the 5 best new SUVs for families, I can specify that my preferred sources, or the only sources to consider for composite research, are Consumer Reports, Car and Driver and Motor Trend.

I can also list sources to ignore. For instance, let’s say I want to generate a balanced piece on George W. Bush’s presidential legacy. I would exclude sources that either overly praise or overly criticize his time in office in favor of sources that are more objective and in line with what I want to generate.

By doing this, we take away the guesswork of LLMs trying to infer what the ‘best’ source is for an item and let them go straight to search and processing.
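A sketch of both halves of guiding: generating the source clause appended to the prompt, and checking that cited URLs actually come from the preferred list. The domains are my assumption of the sites for the three publications named above; `guide_clause` and `from_preferred` are hypothetical helpers.

```python
from urllib.parse import urlparse

# Assumed domains for Consumer Reports, Car and Driver and Motor Trend.
PREFERRED = ["consumerreports.org", "caranddriver.com", "motortrend.com"]


def guide_clause(preferred: list[str], excluded: list[str]) -> str:
    """Build the sentence appended to the prompt to steer sourcing."""
    clause = "Use only these sources: " + ", ".join(preferred) + "."
    if excluded:
        clause += " Ignore: " + ", ".join(excluded) + "."
    return clause


def from_preferred(url: str, preferred: list[str]) -> bool:
    """True if the URL's host is a preferred domain or one of its subdomains."""
    host = (urlparse(url).hostname or "").lower()
    return any(host == d or host.endswith("." + d) for d in preferred)


print(guide_clause(PREFERRED, ["reddit.com"]))
print(from_preferred("https://www.caranddriver.com/reviews/", PREFERRED))
```

Matching on the hostname suffix (rather than a substring) keeps look-alike hosts such as notcaranddriver.com from passing.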

Review Prompts with LLMs

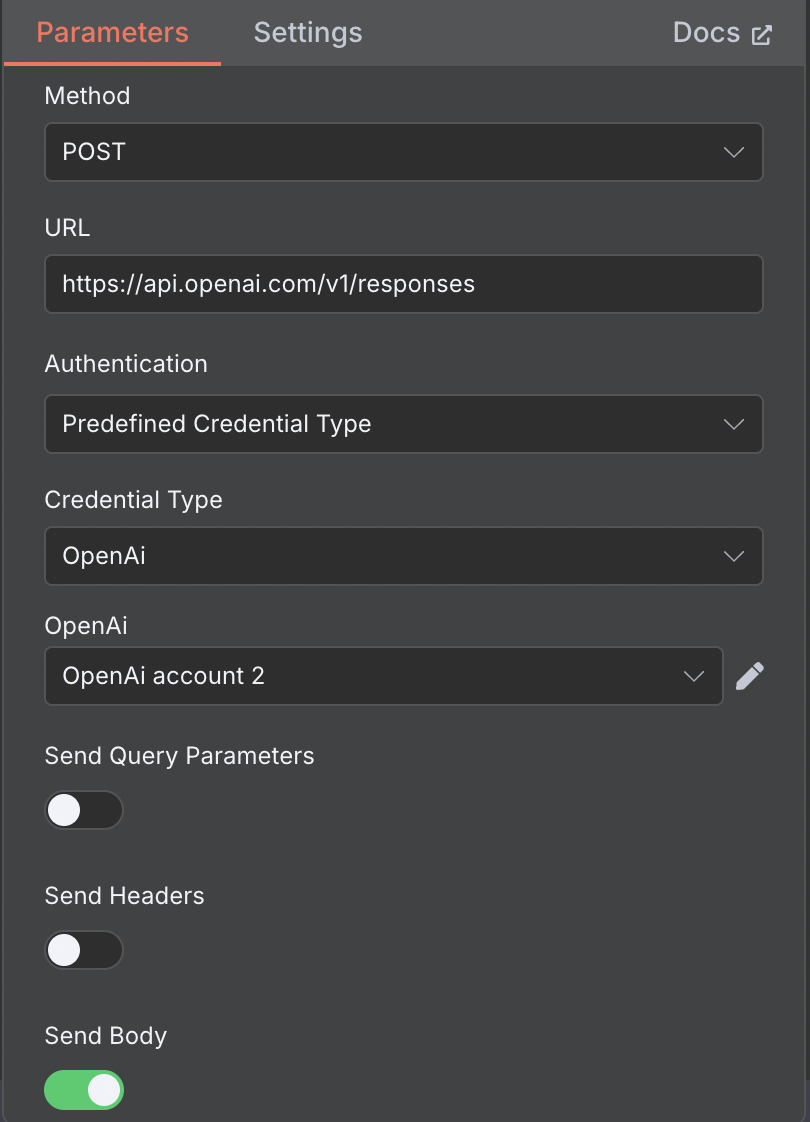

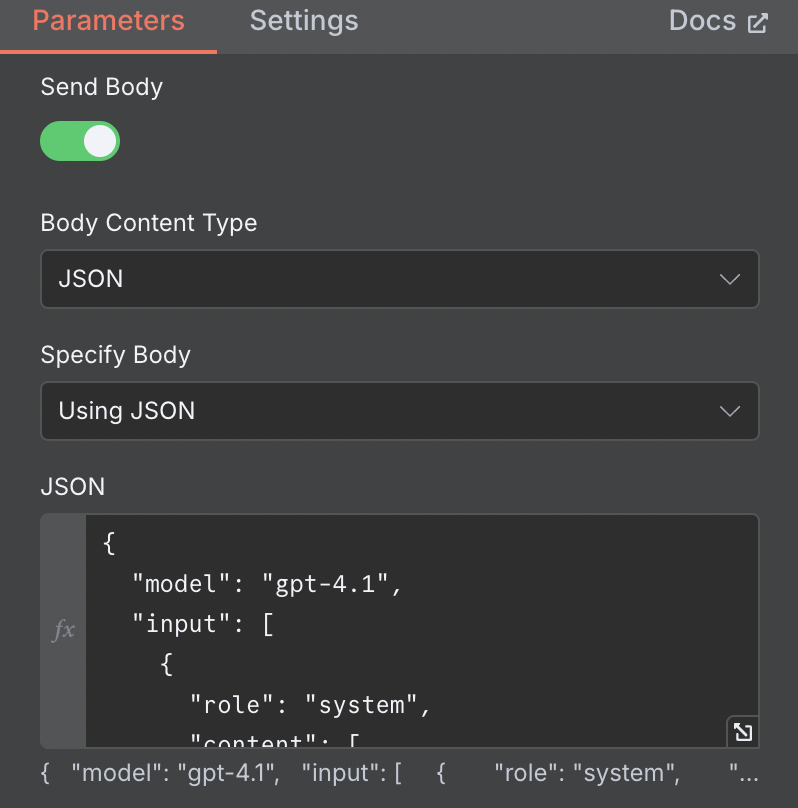

Before you ask an LLM to generate a single piece of output, you should always paste your prompt into it and ask it to review the prompt for points of ambiguity.

Iterating on a functionally deficient prompt wastes resources, whereas building the right prompt first and then fine-tuning output is the far more efficient path.

Here’s the prompt I use to critically analyze my work:

You are a Prompt Auditor. Evaluate the target prompt strictly and return JSON that follows the schema below.

RUNTIME ASSUMPTIONS

- Timezone: America/Chicago. Use ISO dates.

- Reproducibility: temperature ≤ 0.3, top_p = 1.0. If supported, seed = 42.

- Do NOT execute the target prompt’s task. Only evaluate and produce clarifications/rewrites.

DEFINITIONS

- Components = { goal, inputs_context, constraints, required_process, websearch_dependencies, output_format, style_voice, examples_test_cases, non_goals_scope }.

- Ambiguity categories = { vague_quantifier, subjective_term, relative_time_place, undefined_entity_acronym, missing_unit_range, schema_gap, eval_criteria_gap, dependency_unspecified }.

- “Significant difference” threshold (for variance risk): any of { schema_mismatch, contradictory key facts, length_delta>20%, low_semantic_overlap }.

WEBSEARCH DECISION RULES

- Flag websearch_required = true if the target prompt asks for: current facts/stats, named entities, “latest/today”, laws/policies, prices, schedules, or anything dated after the model’s known cutoff.

- If websearch_required = true:

  - Provide candidate_domains (3–8 authoritative sites) and query_templates (2–5).

  - If you actually perform search in your environment, list sources_found with {title, url, publish_date}. If you do not perform search, leave sources_found empty and only provide candidate_domains and query_templates.

OUTPUT FORMAT (JSON only)

    {
      "ambiguity_report": [
        {
          "component": "",
          "span_quote": "",
          "category": "",
          "why_it_matters": "<1–2 sentences>",
          "severity": "",
          "fix_suggestion": "",
          "clarifying_question": ""
        }
        // …repeat for each issue found
      ],
      "variance_risk_summary": {
        "risk_level": "",
        "drivers": [""],
        "controls": ["Set temperature≤0.3", "Specify schema", "Pin timezone", "Add ranges/units"]
      },
      "resolution_plan": [
        {
          "step": 1,
          "goal": "",
          "actions": [""],
          "acceptance_criteria": [""],
          "websearch_required": <true|false>,
          "candidate_domains": ["", "…"],
          "query_templates": ["", "…"],
          "sources_found": [
            {"title": "", "url": "<url>", "publish_date": "YYYY-MM-DD"}
          ]
        }
        // …continue until the prompt is unambiguous and runnable
      ],
      "final_rewritten_prompt": "<<<A fully clarified, runnable prompt that incorporates fixes, constraints, and exact output schema.>>>"
    }

TARGET PROMPT TO EVALUATE

PROMPT_TO_EVALUATE: """[PASTE YOUR PROMPT HERE]"""

Even for the simplest prompts, I’ll often get a laundry list of flags, and that’s with years of experience writing and reviewing prompts.
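If you run the auditor repeatedly until the prompt is cleared, two small helpers cover the mechanics. This is a sketch: the model call itself is omitted because it depends on your provider, `fill_auditor` and `is_cleared` are hypothetical names, and treating an empty `ambiguity_report` as “cleared” is my assumption. It uses `str.replace` rather than `str.format` because the auditor template contains literal JSON braces, which `str.format` would try to interpret.

```python
import json

PLACEHOLDER = "[PASTE YOUR PROMPT HERE]"


def fill_auditor(template: str, target_prompt: str) -> str:
    """Insert the prompt under review into the auditor template."""
    if PLACEHOLDER not in template:
        raise ValueError("auditor template is missing the placeholder")
    return template.replace(PLACEHOLDER, target_prompt)


def is_cleared(audit_response: str) -> bool:
    """Review step: treat the prompt as cleared once no ambiguities remain."""
    report = json.loads(audit_response)
    return len(report.get("ambiguity_report", [])) == 0


template = 'PROMPT_TO_EVALUATE: """[PASTE YOUR PROMPT HERE]"""'
print(fill_auditor(template, "Write about kale."))
```

In a loop, you would send the filled template to the model, apply the suggested fixes (or the `final_rewritten_prompt`), and repeat until `is_cleared` returns True.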

Compress Compress Compress

The final step is to compress the prompt. Like minifying JavaScript on a site, compression doesn’t do much for a prompt you run a few times, but for a prompt you’ll potentially run millions of times, cutting unnecessary characters, words and phrasing can save thousands of dollars in the long run.
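The arithmetic behind that claim is simple: tokens saved per run × number of runs × price per token. In the sketch below, the $5-per-million-token price is a hypothetical placeholder; check your provider’s current pricing, and note that token counts depend on the model’s tokenizer.

```python
def savings_usd(tokens_saved_per_run: int, runs: int,
                usd_per_million_tokens: float) -> float:
    """Input-token savings from trimming a prompt that runs many times."""
    return tokens_saved_per_run * runs * usd_per_million_tokens / 1_000_000


# Hypothetical placeholder price of $5 per million input tokens.
print(savings_usd(50, 10_000_000, 5.0))  # a prompt run 10 million times
print(savings_usd(50, 100, 5.0))         # the same prompt run 100 times
```

At that placeholder price, trimming 50 tokens from a prompt that runs 10 million times saves $2,500, while the same trim on a prompt run 100 times saves about two and a half cents.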

Compression means both removing words and optimizing the order of instructions so that prompts run quickly and efficiently.

What does compression look like?

Let’s take a basic prompt:

“Write a 200 word summary of Apple’s iPhone 17 launch event. Only talk about the new products and updates introduced and don’t talk about influencer or market reactions. Write it from a neutral point of view and don’t overly praise or criticize their launch event”

It’s fairly well defined, but notice how we ask for output in the first sentence and then add refinements in the following sentences? That ordering can create invisible loops in which a reasoning path starts and is then redirected by later instructions.

Second, we use phrases like launch event multiple times. That may not seem important if we’re running a prompt once, but if this is a foundational prompt for a SaaS product that will run millions of times, reading the same words twice is going to waste thousands of dollars in tokens.
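To spot repeated phrasing like launch event before it multiplies across millions of runs, a rough word-level check helps. This sketch counts repeated n-word phrases; the helper name is hypothetical, and words are only an approximation of tokens.

```python
import re
from collections import Counter


def repeated_phrases(prompt: str, n: int = 2) -> dict[str, int]:
    """Return n-word phrases that appear more than once in the prompt."""
    words = re.findall(r"[a-z0-9’']+", prompt.lower())
    ngrams = Counter(
        " ".join(words[i:i + n]) for i in range(len(words) - n + 1)
    )
    return {phrase: count for phrase, count in ngrams.items() if count > 1}


prompt = (
    "Write a 200 word summary of Apple’s iPhone 17 launch event. Only talk "
    "about the new products and updates introduced and don’t talk about "
    "influencer or market reactions. Write it from a neutral point of view "
    "and don’t overly praise or criticize their launch event"
)
print(repeated_phrases(prompt))
```

Run on the original iPhone prompt, it flags launch event and talk about, each appearing twice.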

Here’s the compressed prompt:

Write 175-225 words in neutral plain English covering only new products and updates from Apple’s iPhone 17 launch event.

It’s to the point, efficient and doesn’t restate words. One key point: don’t over-compress and accidentally remove key guardrails. Always err on the side of caution, and again, if you aren’t going to use the same prompt many times, compression may not be necessary.

Putting it All Together Using the Gallahue Method

Consider this prompt for someone looking to write a nutrition guide. I’ve started it at a base level with no definition, guiding or review:

“Hey ChatGPT I need you to write a 600 word article about Kale. I want you to cover kale from three different angles. The first is a overview of what kale is and why it’s considered a superfood due to nutrient density, vitamins A,C, and K, fiber, antioxidants and how those are important for heart, bone and eye health. Second can you cover common diets that use a lot of kale like vegan, keto, paleo and mediterranean diets and finally can you give four examples of how someone can use kale in their day to day cooking? Ideally raw salads, sautéed dishes, smoothies and kale chips.”

On the surface it seems basic and self-explanatory, but if I’m generating the same output for 30-40 foods or ingredients, its inefficiencies will slow generation down and may not produce the best output.

Here’s the optimized version that removes ambiguity and provides specific instruction for output:

Write 550–650 words in plain English about kale. Output only the article text with exactly three H2 headings: Overview, Health Benefits, Diet Uses.

Cover: what kale is and common varieties; why it’s considered a “superfood” (nutrient density—vitamins A, C, K; fiber; antioxidants—and key benefits for heart, bone, and eye health); and how to use it across diets (Mediterranean, vegan/vegetarian, keto, paleo) with four brief prep examples (raw salad, sautéed, smoothie, baked chips).

Keep a neutral, informative tone. Avoid fluff, anecdotes, lists, citations, or calls to action.

It’s to the point, specific about output and leaves no doubt about what the LLM needs to generate to satisfy the output requirements. Applying my methodology to your prompts going forward will improve not only your understanding of how LLMs reason about output but also the overall quality of what they deliver.
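Because the optimized kale prompt states its requirements precisely, a generated article can be checked against them automatically before it ships, which matters when the same prompt runs for 30-40 foods. A sketch, assuming the H2 headings come back as Markdown `## ` lines; excluding heading lines from the word count is my assumption, and `check_article` is a hypothetical helper.

```python
import re

REQUIRED_H2 = ["Overview", "Health Benefits", "Diet Uses"]


def check_article(text: str, lo: int = 550, hi: int = 650) -> list[str]:
    """Return spec violations; an empty list means the article passes."""
    problems = []
    headings = re.findall(r"^## (.+?)[ \t]*$", text, flags=re.MULTILINE)
    if headings != REQUIRED_H2:
        problems.append(f"expected H2 headings {REQUIRED_H2}, got {headings}")
    body = re.sub(r"^## .*$", "", text, flags=re.MULTILINE)  # drop heading lines
    word_count = len(body.split())
    if not lo <= word_count <= hi:
        problems.append(f"word count {word_count} outside {lo}-{hi}")
    return problems


draft = "## Overview\n" + "kale " * 100
for problem in check_article(draft):
    print(problem)
```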

In closing …

It’s important to realize that no prompt will be perfect the first time you run it; in fact, many of the prompts I work on have gone through 30-60 revisions in staging before they ever make it to production.

Think of this process as a debugging step for human language and it will all make sense.